|

Size: 10437

Comment:

|

Size: 37865

Comment:

|


| Line 1: | Line 1: |

| ## page was renamed from Cluster/CloudUsage | |

| Line 14: | Line 15: |

| This is a beta service, since deployment and development are ongoing. However, access to the infrastructure can be granted to certain users to test the functionality. We highly recommend checking this document frequently, since the documentation may change. The cloud is managed from the [[Cluster/Usage|GRIDUI Cluster]]. Ensure that you have the credentials properly installed by issuing the following command and checking that it returns something: {{{ $ echo $NOVA_API_KEY }}} It should return a string. If you do not see anything, please [[http://support.ifca.es|open an incident]]. == Create a machine == To create a machine you have to perform several steps: * Decide which of the pre-built images you are going to use. * Decide which of the available sizes is suitable for you. * Decide which keypair should be used to connect to the machine (and create it if it does not exist yet). |

This is a beta service, since deployment and development are ongoing. However, access is granted to certain users to test the functionality. Check this document frequently for updates, since the service may change. IFCA uses !OpenStack (Folsom version) to manage the cloud service, which can be accessed in several ways: * [[http://portal.cloud.ifca.es|Web dashboard]] * [[http://api.openstack.org/|OpenStack API]], which can be used with the `nova` command line interface. * [[http://aws.amazon.com/ec2/|Amazon EC2 API]], available with the `euca` commands. This document focuses on the usage of the `nova` command in the [[Cluster/Usage|GRIDUI Cluster]]. This is the recommended client tool for our infrastructure. However, we also support an EC2 API that can be used with the `euca` commands. For a brief description of the `euca` commands and their `nova` equivalents see the [[#Euca_commands|Euca commands section]]. For up-to-date documentation on the CLI tools, check the [[http://docs.openstack.org/cli/quick-start/content/index.html|OpenStack official documentation]]. == Access from the GRIDUI Cluster == Important: open a ticket in the [[http://support.ifca.es|helpdesk]] if you notice problems using cloud services (select CLOUD in the menu to open the ticket). First open a session in the griduisl6 node. Now you need to obtain your credentials from the !OpenStack dashboard: log in to the [[http://portal.cloud.ifca.es|Web portal]], go to your Access & Security area (the link is in the Projects section) and then to the API Access subsection. There you will find two different kinds of credentials: * The !OpenStack Credentials (RC file), which can be used with the `nova` command. * The EC2 Credentials, which can be used with the `euca` tools. Download or copy the configuration file for !OpenStack to your grid account directory. Source it to set up the environment, providing the corresponding password when prompted: {{{ $ .
openrc.sh Please enter your OpenStack Password: }}} Now you should be able to execute the commands to access the CLOUD resources. Try that your environment is correct with the `nova endpoints` command. It should return all the services available in the !OpenStack installation: {{{ $ nova endpoints +-------------+---------------------------------------------------------------+ | nova-volume | Value | +-------------+---------------------------------------------------------------+ | adminURL | http://cloud.ifca.es:8776/v1/c725b18d8d0643e7b410dc5a8d9ab554 | | internalURL | http://cloud.ifca.es:8776/v1/c725b18d8d0643e7b410dc5a8d9ab554 | | publicURL | http://cloud.ifca.es:8776/v1/c725b18d8d0643e7b410dc5a8d9ab554 | | region | RegionOne | +-------------+---------------------------------------------------------------+ +-------------+-------------------------------+ | glance | Value | +-------------+-------------------------------+ | adminURL | http://glance.ifca.es:9292/v1 | | internalURL | http://glance.ifca.es:9292/v1 | | publicURL | http://glance.ifca.es:9292/v1 | | region | RegionOne | +-------------+-------------------------------+ +-------------+-----------------------------------------------------------------+ | nova | Value | +-------------+-----------------------------------------------------------------+ | adminURL | http://cloud.ifca.es:8774/v1.1/c725b18d8d0643e7b410dc5a8d9ab554 | | internalURL | http://cloud.ifca.es:8774/v1.1/c725b18d8d0643e7b410dc5a8d9ab554 | | publicURL | http://cloud.ifca.es:8774/v1.1/c725b18d8d0643e7b410dc5a8d9ab554 | | region | RegionOne | | serviceName | nova | +-------------+-----------------------------------------------------------------+ +-------------+------------------------------------------+ | ec2 | Value | +-------------+------------------------------------------+ | adminURL | http://cloud.ifca.es:8773/services/Admin | | internalURL | http://cloud.ifca.es:8773/services/Cloud | | publicURL | http://cloud.ifca.es:8773/services/Cloud 
| | region | RegionOne | +-------------+------------------------------------------+ +-------------+------------------------------------+ | keystone | Value | +-------------+------------------------------------+ | adminURL | http://keystone.ifca.es:35357/v2.0 | | internalURL | http://keystone.ifca.es:5000/v2.0 | | publicURL | http://keystone.ifca.es:5000/v2.0 | | region | RegionOne | +-------------+------------------------------------+ }}} == Managing machines == The cloud service lets you instantiate virtual machines (VMs) on demand. When you request the creation of a new VM, you can select the operating system and the size (RAM, disk, CPUs) that will be used to run the machine. This section shows how to discover which images and sizes are available and how to start a new virtual machine. === Keypairs === Before attempting to start a new virtual machine, you should have a keypair that will allow you to log in to the machine once it is running. Normally you just need to create one keypair that can be reused for all your virtual machines (although you can create as many SSH credentials as you want). The `nova keypair-list` command shows your current keypairs. Initially the command should not return anything. To create a new key, use `nova keypair-add` with the name of the key, redirecting the output to the file where you want to store that key.
For example, to create a key named `cloudkey` that will be stored in `cloudkey.pem`: {{{ $ nova keypair-add cloudkey > cloudkey.pem }}} Your newly created keypair should now appear in the list of available keypairs: {{{ $ nova keypair-list +----------+-------------------------------------------------+ | Name | Fingerprint | +----------+-------------------------------------------------+ | cloudkey | 37:fd:b6:73:59:78:fd:f2:7f:e7:9c:1b:9a:88:a5:cb | +----------+-------------------------------------------------+ }}} Make sure that you keep the file `cloudkey.pem` safe, since it contains the private key needed to access your cloud machines. Set proper permissions on the key before using it with `chmod 600 cloudkey.pem` (only the owner can read or write it). If you need to delete one of your keypairs, use the `nova keypair-delete` command. === Images === The service lets you run VMs with different operating systems. You can list all the available ones with the `nova image-list` command. The `ID` of the image is used as an argument for other commands.
{{{ $ nova image-list +--------------------------------------+----------------------------------------------+--------+--------+ | ID | Name | Status | Server | +--------------------------------------+----------------------------------------------+--------+--------+ | 6b3046eb-4649-44d6-96c2-9a93d3aab8dc | Fedora 15 | ACTIVE | | | e803caa2-c247-4088-80fd-54e77b20a5cb | Fedora 15 initrd | ACTIVE | | | 6823e5b0-13fc-4ce3-afd8-057285820ed2 | Fedora 15 kernel | ACTIVE | | | 0249a9cc-dced-4c5f-91eb-d6900576206f | Fedora 17 | ACTIVE | | | f07c936f-7678-40e5-bbfd-f7142a5482ff | Fedora 17 initrd | ACTIVE | | | a0fbc138-1879-439f-8f78-9b98893778b3 | Fedora 17 kernel | ACTIVE | | | d3ac534d-d839-4b25-af92-c143930f3694 | Fedora 17 old glibc | ACTIVE | | | d1eec0f5-e948-435d-899c-d865320698d7 | IFCA Debian Wheezy (2011-08) JeOS | ACTIVE | | | cdbb6f8f-d10e-4e2b-879d-250d29fb9dbb | IFCA Scientific Linux 5.5 + PROOF v5.30.00 | ACTIVE | | | 6857ee01-2ba9-4846-b788-9e826dd9aaba | IFCA Scientific Linux 5.5 + ROOT v5.30.00 | ACTIVE | | | 18d99a06-c3e5-4157-a0e3-37ec34bdfc24 | IFCA Scientific Linux 5.5 JeOS | ACTIVE | | | 75896bad-05d3-45f6-9958-5940f82d0048 | IFCA Scientific Linux 6.2 JeOS | ACTIVE | | | 486c139e-f34d-465c-959c-1b9c8bf60cfd | IFCA Ubuntu Server 10.04 JeOS | ACTIVE | | | 694f2673-7ea3-4690-a25e-c9dd4297519a | IFCA Ubuntu Server 10.04 JeOS | ACTIVE | | | 66963875-5389-4048-b385-6f7e12a0915f | IFCA Ubuntu Server 11.10 JeOS | ACTIVE | | | 3ef6bb0c-6a17-47c9-a949-70256eb6651e | IFCA openSUSE 11.4 + Compilers | ACTIVE | | | daaed27e-6226-4295-8018-ad3b6b5210f6 | IFCA openSUSE 11.4 + Compilers + Mathematica | ACTIVE | | | 29233856-ed8e-4b61-ac81-898eb5e7c263 | IFCA openSUSE 11.4 JeOS | ACTIVE | | | f4e39219-ad13-495e-a35b-315a94675b0f | Ubuntu 11.10 kernel | ACTIVE | | | 369455d3-7f84-4630-b60c-e0ebf29a410c | Ubuntu 12.04 JeOS | ACTIVE | | | 4590d3b0-1df6-49a7-ae68-4dde83089b01 | Ubuntu Server 11.10 JeOS | ACTIVE | | | fea1838f-29a0-47dd-bd84-6c6cc6806ff3 | cloudpipe | 
ACTIVE | | | 6f02785c-5a39-4e1a-a7e3-75d48f0f0076 | ubuntu 12.04 kernel | ACTIVE | | +--------------------------------------+----------------------------------------------+--------+--------+ }}} The `nova image-show` can give you more details about a given image, for example the "IFCA Scientific Linux 5.5 JeOS", which has an ID `18d99a06-c3e5-4157-a0e3-37ec34bdfc24`: {{{ $ nova image-show 18d99a06-c3e5-4157-a0e3-37ec34bdfc24 +----------+--------------------------------------+ | Property | Value | +----------+--------------------------------------+ | created | 2012-01-30T10:12:22Z | | id | 18d99a06-c3e5-4157-a0e3-37ec34bdfc24 | | minDisk | 0 | | minRam | 0 | | name | IFCA Scientific Linux 5.5 JeOS | | progress | 100 | | status | ACTIVE | | updated | 2012-07-18T08:50:48Z | +----------+--------------------------------------+ }}} Information about some of these images is available at [[Cloud/Images]]. === Sizes === As in the case of the image to use, you can select the size of the VM to start. The list of available sizes (flavors in !OpenStack terminology) can be obtained with `nova flavor-list`: {{{ $ nova flavor-list +----+-----------+-----------+------+-----------+------+-------+-------------+-----------+-------------+ | ID | Name | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public | extra_specs | +----+-----------+-----------+------+-----------+------+-------+-------------+-----------+-------------+ | 1 | m1.tiny | 512 | 0 | 0 | | 1 | 1.0 | N/A | {} | | 2 | m1.small | 2048 | 10 | 20 | | 1 | 1.0 | N/A | {} | | 3 | m1.medium | 4096 | 10 | 40 | | 2 | 1.0 | N/A | {} | | 4 | m1.large | 7000 | 10 | 80 | | 4 | 1.0 | N/A | {} | | 5 | m1.xlarge | 14000 | 10 | 160 | | 8 | 1.0 | N/A | {} | +----+-----------+-----------+------+-----------+------+-------+-------------+-----------+-------------+ }}} === Starting a machine === For starting a new VM, you need to specify one image, one size and a name for the new machine. 
Optionally, you can also specify a keypair (it is always recommended to do so). The `nova boot` command lets you start the machine. For example, in order to create a VM that: * runs IFCA Scientific Linux 5.5 JeOS (ID `18d99a06-c3e5-4157-a0e3-37ec34bdfc24`) * of size `m1.tiny` * with the key `cloudkey` * and named `testVM` you would need to issue the following command: {{{ $ nova boot --flavor m1.tiny --image 18d99a06-c3e5-4157-a0e3-37ec34bdfc24 --key_name cloudkey testVM +------------------------+--------------------------------------+ | Property | Value | +------------------------+--------------------------------------+ | OS-DCF:diskConfig | MANUAL | | OS-EXT-STS:power_state | 0 | | OS-EXT-STS:task_state | scheduling | | OS-EXT-STS:vm_state | building | | accessIPv4 | | | accessIPv6 | | | adminPass | PGg4KxZo3Fn4 | | config_drive | | | created | 2012-09-28T10:02:02Z | | flavor | m1.tiny | | hostId | | | id | cd9e08b9-6899-4748-909a-2ff667ff1905 | | image | IFCA Scientific Linux 5.5 JeOS | | key_name | cloudkey | | metadata | {} | | name | testVM | | progress | 0 | | status | BUILD | | tenant_id | c725b18d8d0643e7b410dc5a8d9ab554 | | updated | 2012-09-28T10:02:03Z | | user_id | db66762e4fe148f8b8484c461a7a7182 | +------------------------+--------------------------------------+ }}} The `id` of the machine will allow you to query its status with `nova show`: {{{ $ nova show cd9e08b9-6899-4748-909a-2ff667ff1905 +------------------------+-----------------------------------------------------------------------+ | Property | Value | +------------------------+-----------------------------------------------------------------------+ | OS-DCF:diskConfig | MANUAL | | OS-EXT-STS:power_state | 2 | | OS-EXT-STS:task_state | None | | OS-EXT-STS:vm_state | active | | accessIPv4 | | | accessIPv6 | | | config_drive | | | created | 2012-09-28T10:02:02Z | | flavor | m1.tiny (1) | | hostId | 5ed92271869711d494f1326b9611825d5635ab659ea3e143c13ca8c6 | | id |
cd9e08b9-6899-4748-909a-2ff667ff1905 | | image | IFCA Scientific Linux 5.5 JeOS (18d99a06-c3e5-4157-a0e3-37ec34bdfc24) | | key_name | cloudkey | | metadata | {} | | name | testVM | | private network | 172.16.2.8 | | progress | 0 | | status | ACTIVE | | tenant_id | c725b18d8d0643e7b410dc5a8d9ab554 | | updated | 2012-09-28T10:03:54Z | | user_id | db66762e4fe148f8b8484c461a7a7182 | +------------------------+-----------------------------------------------------------------------+ }}} Alternatively, you can use `nova list` to get the list of the current machines: {{{ $ nova list +--------------------------------------+--------------------------------------+--------+-------------------------------------+ | ID | Name | Status | Networks | +--------------------------------------+--------------------------------------+--------+-------------------------------------+ | 4b999e84-b37e-4b95-952d-3414ba271930 | c725b18d8d0643e7b410dc5a8d9ab554-vpn | ACTIVE | private=172.16.2.2 | | 3051dea4-0164-4a3a-9af2-14efe7ea93e9 | horizon_test | ACTIVE | private=172.16.2.11, 193.146.75.142 | | e02cee2d-c09f-4429-9724-91d7e10277ec | lbnl | ACTIVE | private=172.16.2.10 | | cd9e08b9-6899-4748-909a-2ff667ff1905 | testVM | ACTIVE | private=172.16.2.8 | +--------------------------------------+--------------------------------------+--------+-------------------------------------+ }}} === Connecting to the machine === Once the machine status is `ACTIVE`, it will be ready for using it. You can connect via ssh with your key. The IP address of the machine is shown in the `nova list` output. {{{ $ ssh -i cloudkey.pem root@172.16.2.8 Last login: Mon May 10 16:11:40 2010 [root@testvm ~]# }}} === VM Lifecycle === Your VM will be available until you explicitly destroy it. 
You can pause/reboot/resume/delete the machine with these commands: || Action || Command || || Reboot the VM || `nova reboot <id>` || || Pause the VM || `nova pause <id>` || || Suspend the VM || `nova suspend <id>` || || Resume the VM || `nova resume <id>` || || Delete the VM || `nova delete <id>` || Deleting the machine will destroy it and the contents of its disk will be lost. Make sure that all your data is stored in permanent storage before deleting the machine. See the section on volumes for more information. {{{ $ nova delete cd9e08b9-6899-4748-909a-2ff667ff1905 $ nova list +--------------------------------------+--------------------------------------+--------+-------------------------------------+ | ID | Name | Status | Networks | +--------------------------------------+--------------------------------------+--------+-------------------------------------+ | 4b999e84-b37e-4b95-952d-3414ba271930 | c725b18d8d0643e7b410dc5a8d9ab554-vpn | ACTIVE | private=172.16.2.2 | | 3051dea4-0164-4a3a-9af2-14efe7ea93e9 | horizon_test | ACTIVE | private=172.16.2.11, 193.146.75.142 | | e02cee2d-c09f-4429-9724-91d7e10277ec | lbnl | ACTIVE | private=172.16.2.10 | +--------------------------------------+--------------------------------------+--------+-------------------------------------+ }}} {{{#!wiki caution '''Always delete your VMs once you do not need them anymore''' Otherwise your usage will be accounted and it will affect your quotas and billing. }}} == Permanent Storage: Volumes == The VMs use a temporary disk that is destroyed when the machine is deleted. If you need permanent storage for your data, you can use Volumes. Volumes are raw block devices that can be created dynamically with a desired size. Volumes can be ''attached'' to and ''detached'' from a running cloud VM to be used as a data disk (similarly to a USB stick that can be plugged into and unplugged from a computer). === Creating Volumes === The `nova volume-create` command creates new volumes.
You must specify the size (in GB) and optionally a name. In our case we will create a new volume with 5GB called `mydata` {{{ $ nova volume-create --display-name 'mydata' 5 +---------------------+----------------------------+ | Property | Value | +---------------------+----------------------------+ | attachments | [] | | availability_zone | nova | | created_at | 2012-09-28 15:16:44.590600 | | display_description | None | | display_name | mydata | | id | 14 | | metadata | {} | | size | 5 | | snapshot_id | None | | status | creating | | volume_type | None | +---------------------+----------------------------+ }}} The `nova volume-list` shows all available volumes: {{{ $ nova volume-list +----+-----------+--------------+------+-------------+-------------+ | ID | Status | Display Name | Size | Volume Type | Attached to | +----+-----------+--------------+------+-------------+-------------+ | 14 | available | mydata | 5 | None | | +----+-----------+--------------+------+-------------+-------------+ }}} The volume is now created and can be attached to a VM. === Attaching Volumes === Attaching is the process of associating a volume with a given instance, so the volume is seen as a new block device in the VM. The command to attach the volume is `nova volume-attach`, and the parameters are: * the id of the VM * the id of the volume * the local block device where the volume will be attached. 
These devices are in the form `/dev/xvd<DEVICE_LETTER>`, where `<DEVICE_LETTER>` goes from `c` to `z` (`/dev/xvdc`, `/dev/xvdd`, ..., `/dev/xvdz`). For example: {{{ $ nova volume-attach 9f870141-638e-4eb9-a2fa-ec770d1edb79 14 /dev/xvdc +----------+-------+ | Property | Value | +----------+-------+ | id | 14 | | volumeId | 14 | +----------+-------+ }}} `nova volume-list` should now show that the volume is attached: {{{ $ nova volume-list +----+--------+--------------+------+-------------+--------------------------------------+ | ID | Status | Display Name | Size | Volume Type | Attached to | +----+--------+--------------+------+-------------+--------------------------------------+ | 14 | in-use | mydata | 5 | None | 9f870141-638e-4eb9-a2fa-ec770d1edb79 | +----+--------+--------------+------+-------------+--------------------------------------+ }}} Log into your VM and check with `fdisk -l` that the volume is now attached: {{{ [root@testvm ~]# fdisk -l | grep Disk Disk /dev/xvda doesn't contain a valid partition table Disk /dev/xvdc doesn't contain a valid partition table Disk /dev/xvda: 3220 MB, 3220176896 bytes Disk identifier: 0x00000000 Disk /dev/xvdc: 5368 MB, 5368709120 bytes Disk identifier: 0x00000000 }}} {{{#!wiki caution Volumes are created without any kind of filesystem, so you will need to create one the first time that you use a volume. A single ext4 filesystem should be enough for most use cases.
You can create such a filesystem with this command from your VM: `mkfs.ext4 /dev/xvdc` (change `/dev/xvdc` if needed) }}} Now you can mount your volume (for example in /srv) and start using it: {{{ [root@testvm ~]# mount -t ext4 -o sync /dev/xvdc /srv [root@testvm ~]# df -h Filesystem Size Used Avail Use% Mounted on /dev/xvda 3.0G 790M 2.1G 28% / tmpfs 245M 0 245M 0% /dev/shm /dev/xvdc 5.0G 138M 4.6G 3% /srv }}} === Detaching Volumes === Once you are done with the volume, you can unmount it in your VM: {{{ [root@testvm ~]# umount /dev/xvdc }}} Then detach it from the VM with `nova volume-detach`, passing the VM and volume ids as arguments: {{{ $ nova volume-detach 9f870141-638e-4eb9-a2fa-ec770d1edb79 14 }}} The volume should appear again as available when you list it: {{{ $ nova volume-list +----+-----------+--------------+------+-------------+-------------+ | ID | Status | Display Name | Size | Volume Type | Attached to | +----+-----------+--------------+------+-------------+-------------+ | 14 | available | mydata | 5 | None | | +----+-----------+--------------+------+-------------+-------------+ }}} === Deleting volumes === You can reuse your volumes in your VMs as many times as you like; the data stored in them will persist after you have destroyed your VMs. If you no longer need one of your volumes, you can delete it with the `nova volume-delete` command. Once you delete a volume, you will not be able to access its data again! {{{ $ nova volume-delete 9 }}} == Networking == All created VMs have a private IP accessible from the GRIDUI. If you need access to the machine from outside GRIDUI, there are two alternatives: using a VPN or assigning public IPs to the VMs. === VPN === VPNs are currently being tested in the infrastructure. The documentation will be updated as soon as the features are available. === Public IPs === IFCA provides a pool of public IPs for use in the cloud service. These can be allocated for your use and assigned to your VMs.
Please note that the number of public IPs is limited! New IPs are allocated with `nova floating-ip-create`: {{{ $ nova floating-ip-create +----------------+-------------+----------+------+ | Ip | Instance Id | Fixed Ip | Pool | +----------------+-------------+----------+------+ | 193.146.75.142 | None | None | nova | +----------------+-------------+----------+------+ }}} You can get the list of your currently allocated IPs with `nova floating-ip-list`: {{{ $ nova floating-ip-list +----------------+-------------+----------+------+ | Ip | Instance Id | Fixed Ip | Pool | +----------------+-------------+----------+------+ | 193.146.75.142 | None | None | nova | +----------------+-------------+----------+------+ }}} This newly allocated IP can now be associated with a running VM with `nova add-floating-ip <VM ID> <IP>`: {{{ $ nova add-floating-ip 9f870141-638e-4eb9-a2fa-ec770d1edb79 193.146.75.142 }}} The list command will show that the IP is assigned: {{{ $ nova floating-ip-list +----------------+--------------------------------------+------------+------+ | Ip | Instance Id | Fixed Ip | Pool | +----------------+--------------------------------------+------------+------+ | 193.146.75.142 | 9f870141-638e-4eb9-a2fa-ec770d1edb79 | 172.16.2.9 | nova | +----------------+--------------------------------------+------------+------+ }}} And you will be able to connect to the machine with this new IP: {{{ $ ssh -i cloudkey.pem root@193.146.75.142 The authenticity of host '193.146.75.142 (193.146.75.142)' can't be established. RSA key fingerprint is 29:80:9b:28:e7:8a:00:fe:6c:60:ef:e6:a6:71:33:bd. Are you sure you want to continue connecting (yes/no)? yes Warning: Permanently added '193.146.75.142' (RSA) to the list of known hosts.
Last login: Fri Sep 28 16:54:40 2012 from gridui02.ifca.es [root@testvm ~]# }}} IPs can be disassociated from the VM with `nova remove-floating-ip <VM ID> <IP>`: {{{ $ nova remove-floating-ip 9f870141-638e-4eb9-a2fa-ec770d1edb79 193.146.75.142 }}} IPs can be associated with and disassociated from any of your running VMs as many times as needed. When it is no longer needed, release the IP address (i.e. it will no longer be available for your use): {{{ $ nova floating-ip-delete 193.146.75.142 }}} == Euca commands == This document is based on the native `nova` commands of !OpenStack, but you can also use the `euca-` commands, which use the EC2 API instead of the !OpenStack one. Credentials for EC2 can be obtained from the web portal. You can download a zip file and unzip it into your GRIDUI home. It contains some certificates and a shell script with the variables needed for running the euca commands. Source it before attempting any command: {{{ $ . ec2rc.sh }}} The process for managing your VMs is practically identical, but you need to be aware that each system uses different identifiers. For example, the available images are listed with `euca-describe-images`.
The id to use when starting the image is in the form `ami-xxxxxxxx`: {{{ $ euca-describe-images IMAGE ami-00000001 None (IFCA Scientific Linux 5.5 JeOS) available public machine instance-store IMAGE ami-00000002 None (IFCA Scientific Linux 5.5 + ROOT v5.30.00) available public machine instance-store IMAGE ami-00000003 None (IFCA Ubuntu Server 10.04 JeOS) available public machine instance-store IMAGE ami-00000004 None (IFCA Debian Wheezy (2011-08) JeOS) available public machine instance-store IMAGE ami-00000005 None (cloudpipe) available public machine instance-store IMAGE ami-00000006 None (IFCA Scientific Linux 5.5 + PROOF v5.30.00) available public machine instance-store IMAGE ami-00000007 None (IFCA Ubuntu Server 10.04 JeOS) available public machine instance-store IMAGE ami-00000008 None (IFCA openSUSE 11.4 JeOS) available public machine instance-store IMAGE ami-00000009 None (IFCA openSUSE 11.4 + Compilers) available public machine instance-store IMAGE ami-0000000a None (IFCA openSUSE 11.4 + Compilers + Mathematica) available public machine instance-store IMAGE ami-0000000b None (IFCA Ubuntu Server 11.10 JeOS) available public machine instance-store IMAGE ami-0000000c None (Fedora 15) available public machine aki-0000000d ari-0000000e instance-store IMAGE aki-0000000d None (Fedora 15 kernel) available private kernel instance-store IMAGE ari-0000000e None (Fedora 15 initrd) available private ramdisk instance-store IMAGE ari-00000011 None (Fedora 17 initrd) available private ramdisk instance-store IMAGE aki-00000010 None (Fedora 17 kernel) available private kernel instance-store IMAGE ami-0000000f None (Fedora 17) available public machine aki-00000010 ari-00000011 instance-store IMAGE ami-00000012 None (Fedora 17 old glibc) available public machine aki-00000010 ari-00000011 instance-store IMAGE ami-00000017 None (IFCA Scientific Linux 6.2 JeOS) available public machine instance-store IMAGE aki-00000023 None (ubuntu 12.04 kernel) available public kernel
instance-store IMAGE ami-00000022 None (Ubuntu 12.04 JeOS) available public machine instance-store IMAGE aki-00000025 None (Ubuntu 11.10 kernel) available public kernel instance-store IMAGE ami-00000024 None (Ubuntu Server 11.10 JeOS) available public machine aki-00000025 instance-store }}} For example, to boot a VM that: * runs IFCA Scientific Linux 5.5 JeOS (ID `ami-00000001`) * is of size `m1.tiny` * uses the key `cloudkey` run: {{{ $ euca-run-instances -k cloudkey -t m1.tiny ami-00000001 RESERVATION r-d4722tgt c725b18d8d0643e7b410dc5a8d9ab554 default INSTANCE i-00000203 ami-00000001 server-515 server-515 pending cloudkey 2012-10-01T08:46:42.000Z None None }}} Again, note the different kind of identifiers. Any other euca command referring to this VM should use `i-00000203` as the identifier. The following table summarizes the main commands and their euca equivalents. || Action || nova || euca || || Create a keypair named `cloudkey` || `nova keypair-add cloudkey > cloudkey.pem` || `euca-add-keypair cloudkey > cloudkey.pem` || || List keypairs || `nova keypair-list` || `euca-describe-keypairs` || || List images || `nova image-list` || `euca-describe-images` || || List sizes || `nova flavor-list` || Not available || || Start VM || `nova boot --flavor <flavor_name> --image <image_id> --key-name <key_name> <VM_NAME>` || `euca-run-instances -t <flavor_name> -k <key_name> ami-<AMI>` || || List VMs || `nova list` || `euca-describe-instances` || || Show VM details || `nova show <vm_id>` || `euca-describe-instances i-<vm_id>` || || Delete VM || `nova delete <vm_id>` || `euca-terminate-instances i-<vm_id>` || || Create Volume || `nova volume-create <size in GB>` || `euca-create-volume -s <size in GB>` || || List Volumes || `nova volume-list` || `euca-describe-volumes` || || Attach Volume || `nova volume-attach <vm_id> <vol_id> <local device>` || `euca-attach-volume -i i-<vm_id> -d <local device> vol-<vol_id>` || || Detach Volume || `nova volume-detach <vm_id> <vol_id>` || `euca-detach-volume
vol-<vol_id>` || || Allocate IP || `nova floating-ip-create` || `euca-allocate-address` || || Associate IP || `nova add-floating-ip <vm_id> <IP>` || `euca-associate-address -i i-<vm_id> <IP>` || || List IPs || `nova floating-ip-list` || `euca-describe-addresses` || || Disassociate IP || `nova remove-floating-ip <vm_id> <IP>` || `euca-disassociate-address <IP>` || || Release IP || `nova floating-ip-delete <IP>` || `euca-release-address <IP>` || == Using the web portal == The !OpenStack dashboard lets you perform all the operations described in this manual from your web browser. '''NEEDS REVISION''' === Creating a Machine with OpenStack === Go to http://portal.cloud.ifca.es to access the !OpenStack dashboard, which lets you create a new machine in the cloud. |

| Line 35: | Line 596: |

==== Image selection ==== There are several pre-built images available. To check them, use the `euca-describe-images` command: {{{ $ euca-describe-images IMAGE ami-00000008 None (cloudpipe) available public machine instance-store IMAGE ami-00000007 None (Debian Wheezy (2011-08)) available public machine instance-store IMAGE ami-00000006 None (lucid-server-uec-amd64.img) available public machine instance-store IMAGE ami-00000003 None (Scientific Linux 5.5) available public machine instance-store IMAGE ami-00000001 None (Scientific Linux 5.5) available public machine instance-store }}} Once you have decided which image to use, write down its identifier (ami-XXXXXXXX). ==== Instance types ==== You can choose the size of your machine (i.e. how many CPUs and how much memory) from the following instance types: ===== Standard machines ===== || '''Name''' || '''Memory''' || '''# CPU''' || '''Local storage''' || '''Swap''' || || m1.tiny || 512MB || 1 || 0GB || 0GB|| || m1.small || 2048MB || 1 || 20GB || 0GB|| || m1.medium || 4096MB || 2 || 40GB || 0GB|| || m1.large || 8192MB || 4 || 80GB || 0GB|| || m1.xlarge || 16384MB || 8 || 160GB || 0GB|| ===== High-memory machines ===== || '''Name''' || '''Memory''' || '''# CPU''' || '''Local storage''' || '''Swap''' || || m2.8g || 8192MB || 1 || 10GB || 0GB|| |
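As a rough illustration of the image selection step above, the identifier can be picked out of the listing by image name instead of being copied by hand. The helper below is only a sketch: `ami_for_name` is a hypothetical name, and it assumes the listing format shown above, where the second field is the identifier and the image name appears in parentheses.

```shell
# Hypothetical helper (not part of the euca tools): print the identifier of
# the first image whose parenthesised name equals the first argument.
# It reads the output of `euca-describe-images` from standard input.
ami_for_name() {
    awk -v name="($1)" '$1 == "IMAGE" && index($0, name) { print $2; exit }'
}
```

It would be used as `euca-describe-images | ami_for_name "cloudpipe"`. When several images share a name, as the two Scientific Linux 5.5 entries do in the listing above, only the first match is printed.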

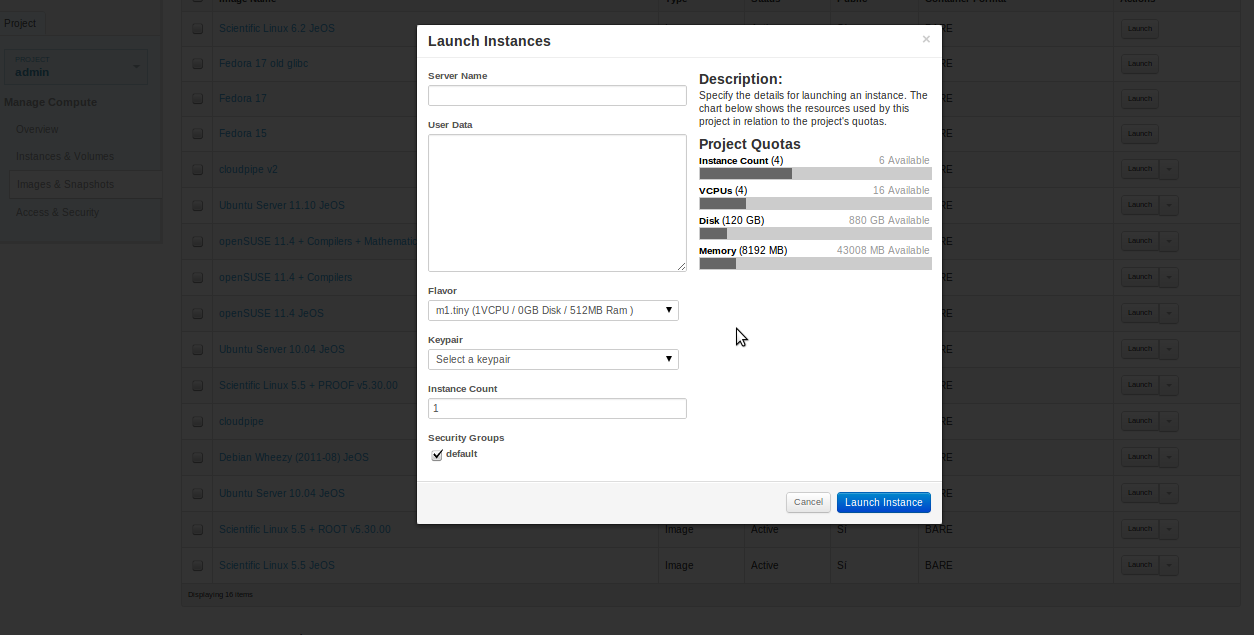

Select the image that you want to use from the list of operating systems and click “Launch”. A popup window will appear, where you have to choose the configuration of the machine (requirements, name of the server...). {{attachment:openstack2.png|alt text|width=600}} |

| Line 69: | Line 602: |

| For most users this is a one-time step (although you can create as many SSH credentials as you want). You have to create an SSH keypair to be injected into the newly created machine with the following command (it will create a keypair named `cloudkey` and store it under `~/.cloud/cloudkey.pem`): {{{ $ euca-add-keypair cloudkey > ~/.cloud/cloudkey.pem }}} Make sure that you keep the file `~/.cloud/cloudkey.pem` safe, since it contains the private key needed to access your cloud machines. You can check the name later with the `euca-describe-keypairs` command. === Launching the instance === To launch the instance, you have to issue `euca-run-instances`, specifying: * which keypair to use (in the example `cloudkey`). * which size should be used (in the example `m1.tiny`). * which image should be used (in the example `ami-00000001`). {{{ $ euca-run-instances -k cloudkey -t m1.tiny ami-00000001 RESERVATION r-1zdwog0m ACES default INSTANCE i-00000048 ami-00000001 scheduling cloudkey (ACES, None) 2011-09-02T12:19:41Z None None }}} You can check its status with `euca-describe-instances`: {{{ $ euca-describe-instances i-00000048 RESERVATION r-vmfu1xq2 ACES default INSTANCE i-00000048 ami-00000001 172.16.1.8 172.16.1.8 blocked cloudkey (ACES, cloud01) 0 m1.tiny 2011-09-02T12:15:32Z nova }}} |
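Checking the status by hand can be replaced by a small polling loop. The following is a minimal sketch, not an official tool: `wait_for_state` and the `DESCRIBE_CMD` override are hypothetical names, and the state word `running` used in the usage note is an assumption, since the sample outputs above only show `scheduling` and `blocked`.

```shell
# Hypothetical helper: poll the status command until the wanted state word
# appears in its output, or give up after a number of attempts.
#   $1 instance id, $2 wanted state, $3 attempts (default 30),
#   $4 seconds between attempts (default 10)
# DESCRIBE_CMD can be set to replace euca-describe-instances, e.g. for testing.
wait_for_state() {
    instance_id=$1
    wanted=$2
    tries=${3:-30}
    delay=${4:-10}
    describe=${DESCRIBE_CMD:-euca-describe-instances}
    i=0
    while [ "$i" -lt "$tries" ]; do
        # -w matches the state as a whole word anywhere in the output
        if "$describe" "$instance_id" | grep -qw -- "$wanted"; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}
```

A call such as `wait_for_state i-00000048 running && ssh -i ~/.cloud/cloudkey.pem root@172.16.1.8` would then connect only once the instance reports the wanted state.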

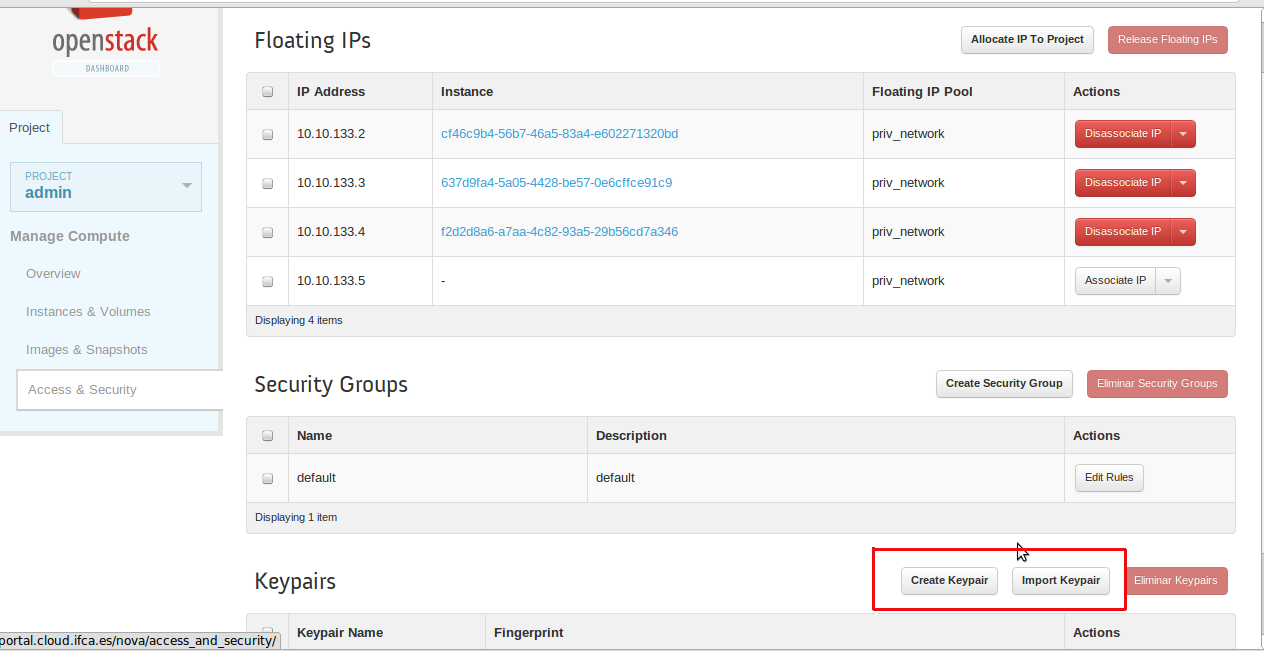

You must import or create a key in order to access that image. To do so, go to the “Access & Security” tab and click on Create or Import Keypair. {{attachment:openstack1.png|alt text|width=600}}



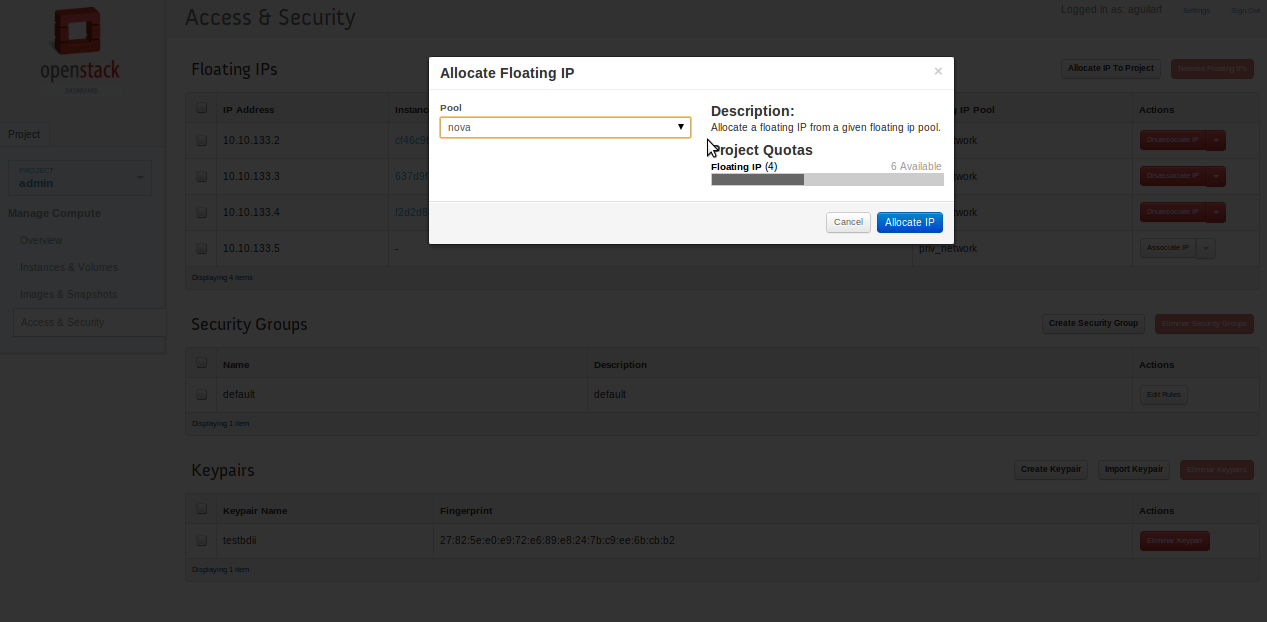

In order to access the image through ssh, you must assign an IP to the instance. Click on “Access & Security” again and select “Allocate IP to project”. Choose the type of IP that you want to use and click “Allocate IP”. After that, you need to link that IP with your new instance. Click on the “Associate IP” button of your new IP and select the instance that you have just created. {{attachment:openstack3.png|alt text|width=600}}



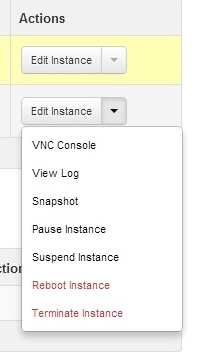

The last step is to download the keypair that you have created or imported and move it to the machine that you will use to connect to the instance (gridui.ifca.es). Change its permissions to 600 and use the following command to connect:

{{{
$ ssh -i clave.pem root@cloud.image.IP
}}}

Done.

=== VM Lifecycle ===

You can access your VM until you destroy it. It can be managed through the actions listed below:

{{attachment:VMoptions.jpg|alt text|width=200}}

|| Action || Explanation ||
|| View Log || Shows the system log in the browser ||
|| Snapshot || Creates a new launchable copy of a VM ||
|| Pause Instance || Pauses the VM without shutting it down ||
|| Suspend Instance || Suspends the VM. You can later resume it from the point where it was suspended. ||
|| Reboot Instance || Reboots the VM ||
|| Terminate Instance || DESTROYS the VM. Once you click on this action, the VM will no longer be available. ||

Cloud Computing at IFCA

This is a beta service

Please note that we are currently deploying the Cloud infrastructure at IFCA, so work is still in progress. If you find any error, please open a ticket on the helpdesk.


1. Introduction

This is a beta service, since deployment and development are ongoing. However, access is granted to certain users to test the functionality.

Check this document frequently for updates, since the service may change.

IFCA uses OpenStack (Folsom version) to manage the cloud service, which can be accessed in several ways:

Web dashboard.

OpenStack API, which can be used with the nova command line interface.

Amazon EC2 API, available with the euca commands.

This document focuses on the usage of the nova command in the GRIDUI Cluster. This is the recommended client tool to use with our infrastructure. However, we also support an EC2 API that can be used with the euca commands. For a brief description of the euca commands and their nova equivalents, see the Euca commands section.

For the latest documentation on the CLI tools, check the official OpenStack documentation.

2. Access from the GRIDUI Cluster

Important: open a ticket at the helpdesk if you notice problems using the cloud services (select CLOUD in the menu when opening the ticket).

First open a session in the griduisl6 node.

Now you need to obtain your credentials from the OpenStack dashboard: log in to the Web portal, go to your Access & Security area (the link is in the Projects section) and then to the API Access subsection. There you will find two different kinds of credentials:

The OpenStack Credentials (RC file), which can be used with the nova command.

The EC2 Credentials, which can be used with the euca tools.

Download or copy the OpenStack configuration file to your grid account directory. Source it to set up the environment, providing the corresponding password when prompted:

$ . openrc.sh
Please enter your OpenStack Password:

Now you should be able to execute the commands to access the CLOUD resources.
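Before running any nova command, it can help to confirm that sourcing the RC file actually populated the environment. The sketch below is only illustrative: the helper name check_openstack_env is hypothetical, and the variable names are an assumption based on what OpenStack RC files typically export, so compare them with the contents of your own openrc.sh.

```shell
# Report which of the expected OpenStack variables are missing.
# ASSUMPTION: these variable names are the ones a typical RC file exports;
# check your own openrc.sh and adjust the list if it differs.
check_openstack_env() {
    missing=0
    for name in OS_AUTH_URL OS_TENANT_NAME OS_USERNAME OS_PASSWORD; do
        # Indirect lookup of the variable whose name is stored in $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "missing: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

Calling check_openstack_env right after ". openrc.sh" prints one line per missing variable and returns a non-zero status if any is unset.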

Check that your environment is correct with the nova endpoints command. It should return all the services available in the OpenStack installation:

$ nova endpoints
+-------------+---------------------------------------------------------------+
| nova-volume | Value |
+-------------+---------------------------------------------------------------+
| adminURL | http://cloud.ifca.es:8776/v1/c725b18d8d0643e7b410dc5a8d9ab554 |
| internalURL | http://cloud.ifca.es:8776/v1/c725b18d8d0643e7b410dc5a8d9ab554 |
| publicURL | http://cloud.ifca.es:8776/v1/c725b18d8d0643e7b410dc5a8d9ab554 |
| region | RegionOne |
+-------------+---------------------------------------------------------------+
+-------------+-------------------------------+
| glance | Value |
+-------------+-------------------------------+
| adminURL | http://glance.ifca.es:9292/v1 |
| internalURL | http://glance.ifca.es:9292/v1 |
| publicURL | http://glance.ifca.es:9292/v1 |
| region | RegionOne |
+-------------+-------------------------------+
+-------------+-----------------------------------------------------------------+
| nova | Value |
+-------------+-----------------------------------------------------------------+
| adminURL | http://cloud.ifca.es:8774/v1.1/c725b18d8d0643e7b410dc5a8d9ab554 |
| internalURL | http://cloud.ifca.es:8774/v1.1/c725b18d8d0643e7b410dc5a8d9ab554 |
| publicURL | http://cloud.ifca.es:8774/v1.1/c725b18d8d0643e7b410dc5a8d9ab554 |
| region | RegionOne |
| serviceName | nova |
+-------------+-----------------------------------------------------------------+
+-------------+------------------------------------------+
| ec2 | Value |
+-------------+------------------------------------------+
| adminURL | http://cloud.ifca.es:8773/services/Admin |
| internalURL | http://cloud.ifca.es:8773/services/Cloud |
| publicURL | http://cloud.ifca.es:8773/services/Cloud |
| region | RegionOne |
+-------------+------------------------------------------+
+-------------+------------------------------------+
| keystone | Value |
+-------------+------------------------------------+
| adminURL | http://keystone.ifca.es:35357/v2.0 |
| internalURL | http://keystone.ifca.es:5000/v2.0 |
| publicURL | http://keystone.ifca.es:5000/v2.0 |
| region | RegionOne |
+-------------+------------------------------------+

3. Managing machines

The cloud service lets you instantiate virtual machines (VM) on demand. When you request the creation of a new VM, you can select the operating systems and the size (RAM, Disk, CPUs) that will be used to run the machine. In this section we will show how to discover which software and sizes are available and how to start a new virtual machine.

3.1. Keypairs

Before attempting to start a new virtual machine, you should have a keypair that will allow you to login into the machine once it is running. Normally you just need to create one keypair that can be reused for all your virtual machines (although you can create as many SSH credentials as you want).

The nova keypair-list command shows your current keypairs. Initially the command should not return anything.

In order to create a new key, use nova keypair-add with a name for the key, redirecting the output to the file where you want to store it. For example, to create a key named cloudkey that will be stored in cloudkey.pem:

$ nova keypair-add cloudkey > cloudkey.pem

Your recently created keypair should now appear in the list of available keypairs:

$ nova keypair-list
+----------+-------------------------------------------------+
| Name | Fingerprint |
+----------+-------------------------------------------------+
| cloudkey | 37:fd:b6:73:59:78:fd:f2:7f:e7:9c:1b:9a:88:a5:cb |
+----------+-------------------------------------------------+

Make sure that you keep the file cloudkey.pem safe, since it contains the private key needed to access your cloud machines. Set proper permissions on the key before using it with chmod 600 cloudkey.pem (only the owner can read or write it). If you need to delete one of your keypairs, use the nova keypair-delete command.
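The permission requirement can be checked from a script before the key is handed to ssh. The following is a minimal sketch; the helper name key_is_private is hypothetical, and it relies on the first ten characters of the ls -ld output being the file mode string.

```shell
# Succeed only if the key exists as a regular file and its mode is exactly
# rw------- (600), i.e. readable and writable by the owner alone.
key_is_private() {
    [ -f "$1" ] || return 1
    # The first 10 characters of "ls -ld" are the file type and mode bits.
    mode=$(ls -ld -- "$1" | cut -c1-10)
    [ "$mode" = "-rw-------" ]
}
```

For example, "key_is_private cloudkey.pem || chmod 600 cloudkey.pem" tightens the permissions only when needed.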

3.2. Images

The service lets you run VMs with different operating systems. You can list all the available ones with the nova image-list command. The ID of an image is used as an argument for other commands.

$ nova image-list
+--------------------------------------+----------------------------------------------+--------+--------+
| ID | Name | Status | Server |
+--------------------------------------+----------------------------------------------+--------+--------+
| 6b3046eb-4649-44d6-96c2-9a93d3aab8dc | Fedora 15 | ACTIVE | |
| e803caa2-c247-4088-80fd-54e77b20a5cb | Fedora 15 initrd | ACTIVE | |
| 6823e5b0-13fc-4ce3-afd8-057285820ed2 | Fedora 15 kernel | ACTIVE | |
| 0249a9cc-dced-4c5f-91eb-d6900576206f | Fedora 17 | ACTIVE | |
| f07c936f-7678-40e5-bbfd-f7142a5482ff | Fedora 17 initrd | ACTIVE | |
| a0fbc138-1879-439f-8f78-9b98893778b3 | Fedora 17 kernel | ACTIVE | |
| d3ac534d-d839-4b25-af92-c143930f3694 | Fedora 17 old glibc | ACTIVE | |
| d1eec0f5-e948-435d-899c-d865320698d7 | IFCA Debian Wheezy (2011-08) JeOS | ACTIVE | |
| cdbb6f8f-d10e-4e2b-879d-250d29fb9dbb | IFCA Scientific Linux 5.5 + PROOF v5.30.00 | ACTIVE | |
| 6857ee01-2ba9-4846-b788-9e826dd9aaba | IFCA Scientific Linux 5.5 + ROOT v5.30.00 | ACTIVE | |
| 18d99a06-c3e5-4157-a0e3-37ec34bdfc24 | IFCA Scientific Linux 5.5 JeOS | ACTIVE | |
| 75896bad-05d3-45f6-9958-5940f82d0048 | IFCA Scientific Linux 6.2 JeOS | ACTIVE | |
| 486c139e-f34d-465c-959c-1b9c8bf60cfd | IFCA Ubuntu Server 10.04 JeOS | ACTIVE | |
| 694f2673-7ea3-4690-a25e-c9dd4297519a | IFCA Ubuntu Server 10.04 JeOS | ACTIVE | |
| 66963875-5389-4048-b385-6f7e12a0915f | IFCA Ubuntu Server 11.10 JeOS | ACTIVE | |
| 3ef6bb0c-6a17-47c9-a949-70256eb6651e | IFCA openSUSE 11.4 + Compilers | ACTIVE | |
| daaed27e-6226-4295-8018-ad3b6b5210f6 | IFCA openSUSE 11.4 + Compilers + Mathematica | ACTIVE | |
| 29233856-ed8e-4b61-ac81-898eb5e7c263 | IFCA openSUSE 11.4 JeOS | ACTIVE | |
| f4e39219-ad13-495e-a35b-315a94675b0f | Ubuntu 11.10 kernel | ACTIVE | |
| 369455d3-7f84-4630-b60c-e0ebf29a410c | Ubuntu 12.04 JeOS | ACTIVE | |
| 4590d3b0-1df6-49a7-ae68-4dde83089b01 | Ubuntu Server 11.10 JeOS | ACTIVE | |
| fea1838f-29a0-47dd-bd84-6c6cc6806ff3 | cloudpipe | ACTIVE | |
| 6f02785c-5a39-4e1a-a7e3-75d48f0f0076 | ubuntu 12.04 kernel | ACTIVE | |
+--------------------------------------+----------------------------------------------+--------+--------+

The nova image-show command gives you more details about a given image, for example the "IFCA Scientific Linux 5.5 JeOS" image, which has the ID 18d99a06-c3e5-4157-a0e3-37ec34bdfc24:

$ nova image-show 18d99a06-c3e5-4157-a0e3-37ec34bdfc24
+----------+--------------------------------------+
| Property | Value |
+----------+--------------------------------------+
| created | 2012-01-30T10:12:22Z |
| id | 18d99a06-c3e5-4157-a0e3-37ec34bdfc24 |
| minDisk | 0 |
| minRam | 0 |
| name | IFCA Scientific Linux 5.5 JeOS |
| progress | 100 |
| status | ACTIVE |
| updated | 2012-07-18T08:50:48Z |
+----------+--------------------------------------+

Information about some of these images is available at Cloud/Images.
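Since other commands need the image ID rather than its name, a small filter over the nova image-list table can save some copying and pasting. This is only a sketch: image_id_by_name is a hypothetical helper, and it assumes the pipe-delimited table layout shown above.

```shell
# Print the ID of every image whose Name column matches "$1" exactly.
# Reads the table printed by "nova image-list" on standard input.
image_id_by_name() {
    awk -F'|' -v name="$1" '
        NF >= 3 {
            id = $2; n = $3
            gsub(/^ +| +$/, "", id)   # trim padding around the ID cell
            gsub(/^ +| +$/, "", n)    # trim padding around the Name cell
            if (n == name) print id
        }'
}
```

A possible use is: nova image-list | image_id_by_name "IFCA Scientific Linux 5.5 JeOS". Note that some names appear more than once in the list, in which case one ID per matching row is printed.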

3.3. Sizes

As in the case of the image to use, you can select the size of the VM to start. The list of available sizes (flavors in OpenStack terminology) can be obtained with nova flavor-list:

$ nova flavor-list

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+-------------+

| ID | Name | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public | extra_specs |

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+-------------+

| 1 | m1.tiny | 512 | 0 | 0 | | 1 | 1.0 | N/A | {} |

| 2 | m1.small | 2048 | 10 | 20 | | 1 | 1.0 | N/A | {} |

| 3 | m1.medium | 4096 | 10 | 40 | | 2 | 1.0 | N/A | {} |

| 4 | m1.large | 7000 | 10 | 80 | | 4 | 1.0 | N/A | {} |

| 5 | m1.xlarge | 14000 | 10 | 160 | | 8 | 1.0 | N/A | {} |

+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+-------------+

3.4. Starting a machine

To start a new VM, you need to specify one image, one size and a name for the new machine. Optionally, you can also specify a keypair (doing so is always recommended). The nova boot command starts the machine. For example, in order to create a VM that:

runs IFCA Scientific Linux 5.5 JeOS (ID 18d99a06-c3e5-4157-a0e3-37ec34bdfc24)

of size m1.tiny

with the key cloudkey

and named testVM

you would need to issue the following command:

$ nova boot --flavor m1.tiny --image 18d99a06-c3e5-4157-a0e3-37ec34bdfc24 --key_name cloudkey testVM

+------------------------+--------------------------------------+

| Property | Value |

+------------------------+--------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-STS:task_state | scheduling |

| OS-EXT-STS:vm_state | building |

| accessIPv4 | |

| accessIPv6 | |

| adminPass | PGg4KxZo3Fn4 |

| config_drive | |

| created | 2012-09-28T10:02:02Z |

| flavor | m1.tiny |

| hostId | |

| id | cd9e08b9-6899-4748-909a-2ff667ff1905 |

| image | IFCA Scientific Linux 5.5 JeOS |

| key_name | cloudkey |

| metadata | {} |

| name | testVM |

| progress | 0 |

| status | BUILD |

| tenant_id | c725b18d8d0643e7b410dc5a8d9ab554 |

| updated | 2012-09-28T10:02:03Z |

| user_id | db66762e4fe148f8b8484c461a7a7182 |

+------------------------+--------------------------------------+

The id of the machine will allow you to query its status with nova show:

$ nova show cd9e08b9-6899-4748-909a-2ff667ff1905

+------------------------+-----------------------------------------------------------------------+

| Property | Value |

+------------------------+-----------------------------------------------------------------------+

| OS-DCF:diskConfig | MANUAL |

| OS-EXT-STS:power_state | 2 |

| OS-EXT-STS:task_state | None |

| OS-EXT-STS:vm_state | active |

| accessIPv4 | |

| accessIPv6 | |

| config_drive | |

| created | 2012-09-28T10:02:02Z |

| flavor | m1.tiny (1) |

| hostId | 5ed92271869711d494f1326b9611825d5635ab659ea3e143c13ca8c6 |

| id | cd9e08b9-6899-4748-909a-2ff667ff1905 |

| image | IFCA Scientific Linux 5.5 JeOS (18d99a06-c3e5-4157-a0e3-37ec34bdfc24) |

| key_name | cloudkey |

| metadata | {} |

| name | testVM |

| private network | 172.16.2.8 |

| progress | 0 |

| status | ACTIVE |

| tenant_id | c725b18d8d0643e7b410dc5a8d9ab554 |

| updated | 2012-09-28T10:03:54Z |

| user_id | db66762e4fe148f8b8484c461a7a7182 |

+------------------------+-----------------------------------------------------------------------+

Alternatively, you can use nova list to get the list of the current machines:

$ nova list
+--------------------------------------+--------------------------------------+--------+-------------------------------------+
| ID | Name | Status | Networks |
+--------------------------------------+--------------------------------------+--------+-------------------------------------+
| 4b999e84-b37e-4b95-952d-3414ba271930 | c725b18d8d0643e7b410dc5a8d9ab554-vpn | ACTIVE | private=172.16.2.2 |
| 3051dea4-0164-4a3a-9af2-14efe7ea93e9 | horizon_test | ACTIVE | private=172.16.2.11, 193.146.75.142 |
| e02cee2d-c09f-4429-9724-91d7e10277ec | lbnl | ACTIVE | private=172.16.2.10 |
| cd9e08b9-6899-4748-909a-2ff667ff1905 | testVM | ACTIVE | private=172.16.2.8 |
+--------------------------------------+--------------------------------------+--------+-------------------------------------+

3.5. Connecting to the machine

Once the machine status is ACTIVE, it is ready to use. You can connect via ssh with your key. The IP address of the machine is shown in the nova list output.

$ ssh -i cloudkey.pem root@172.16.2.8
Last login: Mon May 10 16:11:40 2010
[root@testvm ~]#
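Because a VM goes through the BUILD state before becoming ACTIVE, a script that boots a machine and then connects to it has to wait in between. The sketch below shows one way to do that by polling nova show; the helper names, the 10 second interval and the 30 attempt limit are arbitrary choices made for illustration, not part of the service.

```shell
# Extract the value of the "status" row from "nova show" output on stdin.
vm_status() {
    awk -F'|' '
        NF >= 3 {
            key = $2; val = $3
            gsub(/^ +| +$/, "", key)
            gsub(/^ +| +$/, "", val)
            if (key == "status") print val
        }'
}

# Poll the VM given as "$1" every 10 seconds until it reports ACTIVE.
# Gives up with a non-zero status after 30 attempts (about 5 minutes).
wait_for_active() {
    tries=0
    while [ "$tries" -lt 30 ]; do
        [ "$(nova show "$1" | vm_status)" = "ACTIVE" ] && return 0
        tries=$((tries + 1))
        sleep 10
    done
    return 1
}
```

With these definitions, "wait_for_active cd9e08b9-6899-4748-909a-2ff667ff1905 && ssh -i cloudkey.pem root@172.16.2.8" would connect only once the machine is up. Note that ACTIVE does not guarantee that the ssh daemon inside the VM has already started, so the first connection attempt may still need a retry.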

3.6. VM Lifecycle

Your VM will be available until you explicitly destroy it. You can pause/reboot/resume/delete the machine with these commands:

|| Action || Command ||
|| Reboot the VM || nova reboot <id> ||
|| Pause the VM || nova pause <id> ||
|| Suspend the VM || nova suspend <id> ||
|| Resume the VM || nova resume <id> ||
|| Delete the VM || nova delete <id> ||

Deleting the machine destroys it, and the contents of its disk will be lost. Make sure that all your data is stored in permanent storage before deleting the machine. See the section on volumes for more information.

$ nova delete cd9e08b9-6899-4748-909a-2ff667ff1905
$ nova list
+--------------------------------------+--------------------------------------+--------+-------------------------------------+
| ID | Name | Status | Networks |
+--------------------------------------+--------------------------------------+--------+-------------------------------------+
| 4b999e84-b37e-4b95-952d-3414ba271930 | c725b18d8d0643e7b410dc5a8d9ab554-vpn | ACTIVE | private=172.16.2.2 |
| 3051dea4-0164-4a3a-9af2-14efe7ea93e9 | horizon_test | ACTIVE | private=172.16.2.11, 193.146.75.142 |
| e02cee2d-c09f-4429-9724-91d7e10277ec | lbnl | ACTIVE | private=172.16.2.10 |
+--------------------------------------+--------------------------------------+--------+-------------------------------------+

Always delete your VMs once you do not need them anymore

Otherwise their usage will keep being accounted for, which will affect your quotas and billing.

4. Permanent Storage: Volumes

The VMs use a temporary disk that is destroyed when the machine is deleted. If you need permanent storage for your data, you can use Volumes. Volumes are raw block devices that can be created dynamically with a desired size. Volumes can be attached to and detached from a running cloud VM to be used as a data disk (similarly to a USB stick that can be plugged into and unplugged from a computer).

4.1. Creating Volumes

The nova volume-create command creates new volumes. You must specify the size (in GB) and, optionally, a name. In this example we create a new 5 GB volume called mydata:

$ nova volume-create --display-name 'mydata' 5

+---------------------+----------------------------+

| Property | Value |

+---------------------+----------------------------+

| attachments | [] |

| availability_zone | nova |

| created_at | 2012-09-28 15:16:44.590600 |

| display_description | None |

| display_name | mydata |

| id | 14 |

| metadata | {} |

| size | 5 |

| snapshot_id | None |

| status | creating |

| volume_type | None |

+---------------------+----------------------------+

The nova volume-list command shows all available volumes:

$ nova volume-list
+----+-----------+--------------+------+-------------+-------------+
| ID | Status | Display Name | Size | Volume Type | Attached to |
+----+-----------+--------------+------+-------------+-------------+
| 14 | available | mydata | 5 | None | |
+----+-----------+--------------+------+-------------+-------------+

The volume is now created and can be attached to a VM.

4.2. Attaching Volumes

Attaching is the process of associating a volume with a given instance, so the volume is seen as a new block device in the VM. The command to attach the volume is nova volume-attach, and the parameters are:

- the id of the VM

- the id of the volume

- the local block device where the volume will be attached. These devices have the form /dev/xvd<DEVICE_LETTER>, where <DEVICE_LETTER> goes from c to z (/dev/xvdc, /dev/xvdd, ..., /dev/xvdz)

For example:

$ nova volume-attach 9f870141-638e-4eb9-a2fa-ec770d1edb79 14 /dev/xvdc
+----------+-------+
| Property | Value |
+----------+-------+
| id | 14 |
| volumeId | 14 |
+----------+-------+
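The device naming rule described above (letters c to z after /dev/xvd) is easy to get wrong in scripts, so it may be worth validating the name before calling nova volume-attach. A minimal sketch, where valid_volume_device is a hypothetical helper name:

```shell
# Succeed only for /dev/xvdc, /dev/xvdd, ..., /dev/xvdz.
valid_volume_device() {
    case "$1" in
        /dev/xvd[c-z]) return 0 ;;
        *) return 1 ;;
    esac
}
```

For instance, "valid_volume_device /dev/xvdc && nova volume-attach <VM ID> <volume ID> /dev/xvdc" would skip the attach call for a name such as /dev/xvda, which is already used by the system disk in the examples of this page.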

nova volume-list should now show that the volume is attached:

$ nova volume-list
+----+--------+--------------+------+-------------+--------------------------------------+
| ID | Status | Display Name | Size | Volume Type | Attached to |
+----+--------+--------------+------+-------------+--------------------------------------+
| 14 | in-use | mydata | 5 | None | 9f870141-638e-4eb9-a2fa-ec770d1edb79 |
+----+--------+--------------+------+-------------+--------------------------------------+

Log into your VM and check with fdisk that the volume is now attached:

[root@testvm ~]# fdisk -l | grep Disk
Disk /dev/xvda doesn't contain a valid partition table
Disk /dev/xvdc doesn't contain a valid partition table
Disk /dev/xvda: 3220 MB, 3220176896 bytes
Disk identifier: 0x00000000
Disk /dev/xvdc: 5368 MB, 5368709120 bytes
Disk identifier: 0x00000000

Volumes are created without any kind of filesystem, so you will need to create one the first time that you use a volume. A single ext4 filesystem should be enough for most use cases. You can create it with this command from your VM: mkfs.ext4 /dev/xvdc (change /dev/xvdc if needed).

Now you can mount your volume (for example in /srv) and start using it:

[root@testvm ~]# mount -t ext4 -o sync /dev/xvdc /srv
[root@testvm ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/xvda 3.0G 790M 2.1G 28% /
tmpfs 245M 0 245M 0% /dev/shm
/dev/xvdc 5.0G 138M 4.6G 3% /srv

4.3. Detaching Volumes

Once you are done with the volume, unmount it in your VM:

[root@testvm ~]# umount /dev/xvdc

Then detach it from the VM with nova volume-detach, passing the VM and volume ids as arguments:

$ nova volume-detach 9f870141-638e-4eb9-a2fa-ec770d1edb79 14

The volume should appear again as available when you list it:

$ nova volume-list
+----+-----------+--------------+------+-------------+-------------+
| ID | Status | Display Name | Size | Volume Type | Attached to |
+----+-----------+--------------+------+-------------+-------------+
| 14 | available | mydata | 5 | None | |
+----+-----------+--------------+------+-------------+-------------+

4.4. Deleting volumes

You can reuse your volumes in your VMs as many times as you like; the data stored in them persists after you have destroyed your VMs. If you no longer need one of your volumes, you can delete it with the nova volume-delete command. Once you delete a volume, you will not be able to access its data again!

$ nova volume-delete 14

5. Networking

All created VMs have a private IP accessible from the GRIDUI. If you need access to the machine from outside GRIDUI, there are two alternatives: using a VPN or assigning public IPs to the VMs.

5.1. VPN

VPNs are currently being tested in the infrastructure. The documentation will be updated as soon as the features are available.

5.2. Public IPs

IFCA provides a pool of public IPs for use in the cloud service. These can be allocated for your use and assigned to your VMs. Please note that the number of public IPs is limited!

New IPs are created with nova floating-ip-create:

$ nova floating-ip-create
+----------------+-------------+----------+------+
| Ip | Instance Id | Fixed Ip | Pool |
+----------------+-------------+----------+------+
| 193.146.75.142 | None | None | nova |
+----------------+-------------+----------+------+

You can get the list of your currently available IPs with nova floating-ip-list:

$ nova floating-ip-list
+----------------+-------------+----------+------+
| Ip | Instance Id | Fixed Ip | Pool |
+----------------+-------------+----------+------+
| 193.146.75.142 | None | None | nova |
+----------------+-------------+----------+------+

This newly allocated IP can now be associated to a running VM with nova add-floating-ip <VM ID> <IP>:

$ nova add-floating-ip 9f870141-638e-4eb9-a2fa-ec770d1edb79 193.146.75.142

The list command will show that the IP is assigned:

$ nova floating-ip-list
+----------------+--------------------------------------+------------+------+
| Ip | Instance Id | Fixed Ip | Pool |
+----------------+--------------------------------------+------------+------+
| 193.146.75.142 | 9f870141-638e-4eb9-a2fa-ec770d1edb79 | 172.16.2.9 | nova |
+----------------+--------------------------------------+------------+------+

And you will be able to connect to the machine with this new IP:

$ ssh -i cloudkey.pem root@193.146.75.142
The authenticity of host '193.146.75.142 (193.146.75.142)' can't be established.
RSA key fingerprint is 29:80:9b:28:e7:8a:00:fe:6c:60:ef:e6:a6:71:33:bd.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '193.146.75.142' (RSA) to the list of known hosts.
Last login: Fri Sep 28 16:54:40 2012 from gridui02.ifca.es
[root@testvm ~]#

IPs can be disassociated from the VM with the nova remove-floating-ip <VM ID> <IP>:

$ nova remove-floating-ip 9f870141-638e-4eb9-a2fa-ec770d1edb79 193.146.75.142

IPs can be associated/disassociated as many times as needed to any VM you may have running. When an IP address is no longer needed, release it (i.e. it will no longer be available for your use):

$ nova floating-ip-delete 193.146.75.142
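Since the number of public IPs is limited, it can be useful to spot addresses that are allocated to you but not associated with any VM, so that they can be reused or released. The sketch below filters the nova floating-ip-list table for rows whose Instance Id is None; free_floating_ips is a hypothetical helper name and the table layout is assumed to match the output shown above.

```shell
# Print every floating IP that is not associated with an instance.
# Reads the table printed by "nova floating-ip-list" on standard input.
free_floating_ips() {
    awk -F'|' '
        NF >= 3 {
            ip = $2; inst = $3
            gsub(/^ +| +$/, "", ip)
            gsub(/^ +| +$/, "", inst)
            if (inst == "None") print ip
        }'
}
```

Running "nova floating-ip-list | free_floating_ips" would list the candidates for nova add-floating-ip or nova floating-ip-delete.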

6. Euca commands

This document is based on the native nova commands of OpenStack, but you can also use the euca- commands, which use the EC2 API instead of the OpenStack one. Credentials for EC2 can be obtained from the web portal. You can download a zip file and unzip it into your GRIDUI home. It contains some certificates and a shell script with the variables needed for running the euca commands. Source it before attempting any command:

$ . ec2rc.sh

The process for managing your VMs is practically identical, but you need to be aware that different identifiers are used at each system. For example, showing all the available images is performed with euca-describe-images. The id to use when starting the image is in the form ami-xxxxxxxx:

$ euca-describe-images
IMAGE ami-00000001 None (IFCA Scientific Linux 5.5 JeOS) available public machine instance-store
IMAGE ami-00000002 None (IFCA Scientific Linux 5.5 + ROOT v5.30.00) available public machine instance-store
IMAGE ami-00000003 None (IFCA Ubuntu Server 10.04 JeOS) available public machine instance-store
IMAGE ami-00000004 None (IFCA Debian Wheezy (2011-08) JeOS) available public machine instance-store
IMAGE ami-00000005 None (cloudpipe) available public machine instance-store
IMAGE ami-00000006 None (IFCA Scientific Linux 5.5 + PROOF v5.30.00) available public machine instance-store
IMAGE ami-00000007 None (IFCA Ubuntu Server 10.04 JeOS) available public machine instance-store
IMAGE ami-00000008 None (IFCA openSUSE 11.4 JeOS) available public machine instance-store
IMAGE ami-00000009 None (IFCA openSUSE 11.4 + Compilers) available public machine instance-store
IMAGE ami-0000000a None (IFCA openSUSE 11.4 + Compilers + Mathematica) available public machine instance-store
IMAGE ami-0000000b None (IFCA Ubuntu Server 11.10 JeOS) available public machine instance-store
IMAGE ami-0000000c None (Fedora 15) available public machine aki-0000000d ari-0000000e instance-store
IMAGE aki-0000000d None (Fedora 15 kernel) available private kernel instance-store
IMAGE ari-0000000e None (Fedora 15 initrd) available private ramdisk instance-store
IMAGE ari-00000011 None (Fedora 17 initrd) available private ramdisk instance-store
IMAGE aki-00000010 None (Fedora 17 kernel) available private kernel instance-store
IMAGE ami-0000000f None (Fedora 17) available public machine aki-00000010 ari-00000011 instance-store
IMAGE ami-00000012 None (Fedora 17 old glibc) available public machine aki-00000010 ari-00000011 instance-store
IMAGE ami-00000017 None (IFCA Scientific Linux 6.2 JeOS) available public machine instance-store
IMAGE aki-00000023 None (ubuntu 12.04 kernel) available public kernel instance-store
IMAGE ami-00000022 None (Ubuntu 12.04 JeOS) available public machine instance-store
IMAGE aki-00000025 None (Ubuntu 11.10 kernel) available public kernel instance-store
IMAGE ami-00000024 None (Ubuntu Server 11.10 JeOS) available public machine aki-00000025 instance-store
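The listing mixes machine images (ami-), kernels (aki-) and ramdisks (ari-). As a minimal sketch, assuming the output keeps the format shown above, the following helper prints only the machine images together with their descriptions. The name `machine_images` is hypothetical and not part of the euca tools.

```shell
# Hypothetical helper (not part of the euca tools): reads the output of
# euca-describe-images on stdin and prints "<image id> <description>"
# for machine images only.
machine_images() {
    awk '$1 == "IMAGE" && $2 ~ /^ami-/ {
        # The description sits between the first "(" and ") available".
        start = index($0, "(")
        end = index($0, ") available")
        print $2, substr($0, start + 1, end - start - 1)
    }'
}

# Demonstration with two lines copied from the listing above.
printf '%s\n' \
    'IMAGE ami-00000001 None (IFCA Scientific Linux 5.5 JeOS) available public machine instance-store' \
    'IMAGE aki-0000000d None (Fedora 15 kernel) available private kernel instance-store' \
    | machine_images
```

On the GRIDUI cluster the helper would be fed by the real command: `euca-describe-images | machine_images`.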

The following command boots a VM that runs IFCA Scientific Linux 5.5 JeOS (ID ami-00000001), of size m1.tiny, with the key cloudkey:

$ euca-run-instances -k cloudkey -t m1.tiny ami-00000001
RESERVATION r-d4722tgt c725b18d8d0643e7b410dc5a8d9ab554 default
INSTANCE i-00000203 ami-00000001 server-515 server-515 pending cloudkey 2012-10-01T08:46:42.000Z None None

Again, note the different kind of identifier: any other euca command that refers to this VM must use i-00000203 as its identifier.
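Since later euca commands need the instance identifier, it can be convenient to capture it when the VM is started. The following is a minimal sketch, assuming the INSTANCE line keeps the layout shown above (identifier in the second field). The name `instance_id` is hypothetical.

```shell
# Hypothetical helper: reads the output of euca-run-instances on stdin
# and prints the instance ID (second field of the INSTANCE line).
instance_id() {
    awk '$1 == "INSTANCE" { print $2 }'
}

# Demonstration with the output shown above.
sample='RESERVATION r-d4722tgt c725b18d8d0643e7b410dc5a8d9ab554 default
INSTANCE i-00000203 ami-00000001 server-515 server-515 pending cloudkey 2012-10-01T08:46:42.000Z None None'
vm_id=$(printf '%s\n' "$sample" | instance_id)
echo "$vm_id"
```

In practice the sample would be replaced by the real command, for example `vm_id=$(euca-run-instances -k cloudkey -t m1.tiny ami-00000001 | instance_id)`, followed by `euca-describe-instances "$vm_id"`.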

The following table summarizes the main nova commands and their euca equivalents.

| Action | nova | euca |
| Create a keypair named cloudkey | nova keypair-add cloudkey > cloudkey.pem | euca-add-keypair cloudkey > cloudkey.pem |
| List keypairs | nova keypair-list | euca-describe-keypairs |
| List images | nova image-list | euca-describe-images |
| List sizes | nova flavor-list | Not available |
| Start VM | nova boot --flavor <flavor_name> --image <image_id> --key-name <key_name> <VM_NAME> | euca-run-instances -t <flavor_name> -k <key_name> ami-<AMI> |
| List VMs | nova list | euca-describe-instances |
| Show VM details | nova show <vm_id> | euca-describe-instances i-<vm_id> |
| Delete VM | nova delete <vm_id> | euca-terminate-instances i-<vm_id> |
| Create Volume | nova volume-create <size in GB> | euca-create-volume -s <size in GB> |
| List Volumes | nova volume-list | euca-describe-volumes |
| Attach Volume | nova volume-attach <vm_id> <vol_id> <local device> | euca-attach-volume -i i-<vm_id> -d <local device> vol-<vol_id> |
| Detach Volume | nova volume-detach <vm_id> <vol_id> | euca-detach-volume vol-<vol_id> |
| Allocate IP | nova floating-ip-create | euca-allocate-address |
| Associate IP | nova add-floating-ip <vm_id> <IP> | euca-associate-address -i i-<vm_id> <IP> |
| List IPs | nova floating-ip-list | euca-describe-addresses |
| Disassociate IP | nova remove-floating-ip <vm_id> <IP> | euca-disassociate-address <IP> |
| Release IP | nova floating-ip-delete <IP> | euca-release-address <IP> |
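For scripts that have to work with either client, the correspondence in the table can be written as a small lookup. This is only a sketch: the function name `euca_equivalent` is hypothetical, and it maps command names only, not their arguments, which differ between the two tools as the table shows.

```shell
# Hypothetical lookup helper built from the table above: prints the euca
# command that corresponds to a nova subcommand. Arguments are not
# translated.
euca_equivalent() {
    case "$1" in
        keypair-add)        echo "euca-add-keypair" ;;
        keypair-list)       echo "euca-describe-keypairs" ;;
        image-list)         echo "euca-describe-images" ;;
        boot)               echo "euca-run-instances" ;;
        list|show)          echo "euca-describe-instances" ;;
        delete)             echo "euca-terminate-instances" ;;
        volume-create)      echo "euca-create-volume" ;;
        volume-list)        echo "euca-describe-volumes" ;;
        volume-attach)      echo "euca-attach-volume" ;;
        volume-detach)      echo "euca-detach-volume" ;;
        floating-ip-create) echo "euca-allocate-address" ;;
        add-floating-ip)    echo "euca-associate-address" ;;
        floating-ip-list)   echo "euca-describe-addresses" ;;
        remove-floating-ip) echo "euca-disassociate-address" ;;
        floating-ip-delete) echo "euca-release-address" ;;
        # flavor-list and anything else has no euca equivalent in the table.
        *) echo "no euca equivalent listed for: $1" >&2; return 1 ;;
    esac
}

euca_equivalent volume-detach
```

Subcommands without an equivalent in the table, such as `flavor-list`, make the function return a non-zero status, so a calling script can fall back to `nova`.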

7. Using the web portal

The OpenStack dashboard lets you perform all the operations described in this manual from your web browser.

NEEDS REVISION

7.1. Creating a Machine with OpenStack

Go to http://portal.cloud.ifca.es to access the OpenStack dashboard, which lets you create a new machine in the cloud.

7.2. Image and size selection

Select the image that you want to use from the list of operating systems and click “Launch”. A popup window appears in which you choose the configuration of the system (requirements, name of the server, etc.).

7.3. Create SSH credentials

You must import or create a key in order to access the machine. To do so, go to the “Access & Security” tab and click on “Create Keypair” or “Import Keypair”.

7.4. Connect to the server

To access the instance through ssh, you must assign an IP to it. Click on “Access & Security” again and select “Allocate IP to project”. Choose the type of IP that you want to use and click “Allocate IP”. Then link that IP to your new instance: click the “Associate IP” button of your new IP and select the instance that you have just created.

7.4.1. SSH Connection

The last step is to download the keypair that you have created or imported and move it to the machine that you will use to connect to the instance (gridui.ifca.es). Change its permissions to 600 and use the following command to connect:

$ ssh -i clave.pem root@cloud.image.IP

Done
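The permission change and the connection can be combined in a small helper. This is a minimal sketch: the function name `ssh_command` is hypothetical, the address 192.0.2.10 is a documentation placeholder rather than a real instance, and the helper only prints the ssh command instead of opening the connection.

```shell
# Hypothetical helper: restricts the private key to its owner (mode 600)
# and prints the ssh command to run. It does not connect by itself.
ssh_command() {
    key=$1   # path to the downloaded private key
    ip=$2    # IP associated with the instance
    chmod 600 "$key" || return 1
    printf 'ssh -i %s root@%s\n' "$key" "$ip"
}

# Demonstration on a throwaway file standing in for the real key.
demo_key=$(mktemp)
chmod 644 "$demo_key"                # start from permissions that are too open
ssh_command "$demo_key" 192.0.2.10   # placeholder IP, not a real instance
ls -l "$demo_key" | cut -c1-10       # shows -rw-------
rm -f "$demo_key"
```

ssh refuses private keys that are readable by other users, which is why the mode is changed before connecting.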

7.5. VM Lifecycle

You can access your VM until you destroy it. You manage it through the actions listed below:

| Action | Explanation |
| View Log | Shows the system log in the browser. |
| Snapshot | Creates a new launchable copy of the VM. |
| Pause Instance | Pauses the VM without shutting it down. |
| Suspend Instance | Shuts down the VM. You can resume it later from the point at which you suspended it. |
| Reboot Instance | Reboots the VM. |
| Terminate Instance | DESTROYS the VM. Once you click on this action, the VM is no longer available. |