Multicore and MPI-Start

By default, MPI-Start starts exactly as many processes as there are slots allocated by the batch system, regardless of the nodes on which those slots are located. While this behavior is fine for simple MPI applications, hybrid applications need finer control over the number and location of the processes they run.
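As a rough illustration of the default policy, the sketch below counts the slots in a batch-system node file. It assumes a PBS/Torque-style file (as in `$PBS_NODEFILE`) with one hostname per allocated slot; the function name `count_slots` is hypothetical and not part of MPI-Start. Under the default policy, this count is the number of processes started, however the slots are spread across nodes.

```bash
#!/usr/bin/env bash
# Hypothetical helper, not part of MPI-Start.
# Reads a node file on stdin (assumed format: one hostname per allocated
# slot, as in $PBS_NODEFILE) and prints the number of slots, i.e. the number
# of processes started under the default one-process-per-slot policy.
count_slots() {
    # Every non-blank line counts as one slot.
    grep -c -v '^[[:space:]]*$'
}
```

For example, `count_slots < "$PBS_NODEFILE"` inside a PBS/Torque job.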

MPI-Start 1.0.4

Since MPI-Start 1.0.4, users can select one of the following placement policies:

- use a process per allocated slot (default),

- specify the total number of processes to start, or

- specify the number of processes to start at each node

The last option is the most appropriate for hybrid applications. The typical use case is to start a single process per node and have each process start as many threads as there are allocated slots on that node.
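The hybrid use case can be sketched as a small planning step: group the allocated slots by node, then start one process per node with as many threads as that node has slots. The sketch assumes a node file with one hostname per allocated slot (PBS/Torque style); `hybrid_layout` is a hypothetical helper and does not reflect how MPI-Start is implemented internally.

```bash
# Hypothetical helper, not part of MPI-Start.
# Reads a node file on stdin (one hostname per allocated slot) and prints,
# for each node in first-seen order: "<host> <processes> <threads>", using
# 1 process per node and 1 thread per allocated slot on that node.
hybrid_layout() {
    awk 'NF {
             if (!($1 in slots)) order[++n] = $1
             slots[$1]++
         }
         END {
             for (i = 1; i <= n; i++) print order[i], 1, slots[order[i]]
         }'
}
```

A node file listing wn01 twice and wn02 three times yields `wn01 1 2` and `wn02 1 3`: two processes in total, running 2 and 3 threads respectively.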

Total Number of Processes

To specify the total number of processes, MPI-Start provides the -np option and the I2G_MPI_NP environment variable; both methods are equivalent.

For example, to start an application with exactly 12 processes (regardless of the number of allocated slots):

or using the newer syntax:

mpi-start -t openmpi -np 12 myapp arg1 arg2

The placement of the processes is determined by the MPI implementation in use; normally, a round-robin policy is followed.
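To make the round-robin remark concrete, the sketch below maps ranks to hosts in round-robin order. It only illustrates the idea: the actual placement is decided by the MPI implementation (which may, for example, fill each node's slots before moving to the next), and `round_robin` is a hypothetical name.

```bash
# Hypothetical illustration of round-robin placement, not MPI-Start code.
# Usage: round_robin NP HOST...
# Prints "<rank> <host>" for ranks 0..NP-1, cycling through the hosts.
round_robin() {
    local np=$1
    shift
    local -a hosts=("$@")
    local rank
    for ((rank = 0; rank < np; rank++)); do
        echo "$rank ${hosts[rank % ${#hosts[@]}]}"
    done
}
```

With `round_robin 12 wn01 wn02 wn03`, each of the three hosts receives 4 of the 12 ranks.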

Processes per Host

Exclusive Execution

It is advisable to run your applications without sharing the Worker Nodes with other applications; otherwise their performance may suffer. With EMI CREAM, exclusive access can be requested by setting WholeNodes=True in your JDL.

As with the total number of processes, both command-line switches and environment variables may be used to control the number of processes per host. There are two options:

- Specify the number of processes per host with the -npnode command-line switch or the I2G_MPI_PER_NODE variable.

- Use -pnode or I2G_MPI_SINGLE_PROCESS to start only one process per host (equivalent to -npnode 1 or I2G_MPI_PER_NODE=1).

For example, to start an application with 2 processes per node (regardless of the number of allocated slots):

or:

mpi-start -t openmpi -npnode 2 myapp arg1 arg2
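One way to picture -npnode is as a rewrite of the host list: each distinct node appears exactly N times, whatever its slot count. The sketch below does this for a node file with one hostname per slot; `per_node_hostlist` is a hypothetical helper, and MPI-Start's actual mechanism may differ. Passing 1 gives the -pnode layout.

```bash
# Hypothetical helper, not part of MPI-Start.
# Usage: per_node_hostlist N < nodefile
# Prints each distinct host from the node file (one hostname per slot)
# exactly N times, in first-seen order.
per_node_hostlist() {
    local per_node=$1
    awk -v n="$per_node" 'NF && !seen[$1]++ {
        for (i = 0; i < n; i++) print $1
    }'
}
```

For a node file listing wn01 three times and wn02 once, `per_node_hostlist 2` prints wn01, wn01, wn02, wn02: both nodes get two processes even though their slot counts differ.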

When using a single process per host, the OpenMP hook (enabled by defining MPI_USE_OMP=1) can also be used to let MPI-Start configure the number of OpenMP threads to start:

or:

mpi-start -t openmpi -pnode -d MPI_USE_OMP=1 myapp arg1 arg2

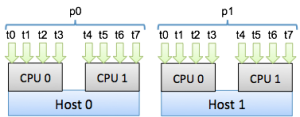

This is the typical case of hybrid MPI/OpenMP application as shown in the figure:
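A minimal sketch of what the OpenMP hook has to work out on each node: the number of slots allocated on that host, which becomes the thread count. It assumes a node file with one hostname per slot and the standard OpenMP variable OMP_NUM_THREADS; `slots_on_host` is a hypothetical helper, not the hook's actual code.

```bash
# Hypothetical helper, not part of MPI-Start.
# Usage: slots_on_host HOST < nodefile
# Prints how many slots the node file (one hostname per slot) lists for HOST,
# or 0 if the host does not appear.
slots_on_host() {
    local host=$1
    awk -v h="$host" '$1 == h { c++ } END { print c + 0 }'
}
```

On a worker node this could feed `export OMP_NUM_THREADS="$(slots_on_host "$(hostname)" < "$PBS_NODEFILE")"`, assuming the output of `hostname` matches the spelling used in the node file (short name versus fully qualified name).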

MPI-Start 1.0.5

MPI-Start 1.0.5 introduces more ways of controlling process placement. The previous options are still supported, and two new ones are available:

- start one process per CPU socket, with threads started for each core of that socket, using the -psocket option;

- start one process per core (no threads), using the -pcore option.
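Both -psocket and -pcore depend on the CPU topology of each node. As a Linux-only sketch, the functions below count sockets and physical cores from /proc/cpuinfo-style text using its `physical id` and `core id` fields. With -psocket, each process would then run (cores / sockets) threads. The function names are hypothetical, and MPI-Start may obtain topology information differently.

```bash
# Hypothetical helpers (Linux only), not part of MPI-Start.
# Both read /proc/cpuinfo-style text on stdin.

# Number of CPU sockets: distinct "physical id" values.
count_sockets() {
    awk -F': *' '/^physical id/ { s[$2] = 1 }
                 END { n = 0; for (k in s) n++; print n }'
}

# Number of physical cores: distinct (physical id, core id) pairs, so
# hyper-threads sharing one core are counted once.
count_cores() {
    awk -F': *' '/^physical id/ { p = $2 }
                 /^core id/     { c[p "-" $2] = 1 }
                 END { n = 0; for (k in c) n++; print n }'
}
```

For example, `count_sockets < /proc/cpuinfo` and `count_cores < /proc/cpuinfo` give the number of processes that -psocket and -pcore would start on that node.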